Scalable Oversight by Learning to Decompose Tasks

How do we control superintelligent AI?

Synopsis

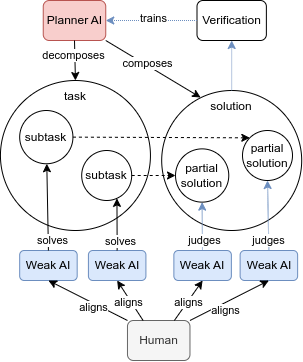

Scalable Oversight by Learning to Decompose Tasks explores a route to supervising increasingly capable reinforcement-learning (RL) systems: an AI learns to break a hard task into “safe” subtasks that a human-level model (or a human) can reliably judge. The approach is inspired by Iterated Amplification but removes the bottleneck of manual decomposition. We train a decomposer policy with RL to:

- output subtasks in a constrained format,

- keep each subtask within the competence of a weaker LLM executor, and

- maximise final task correctness once the sub‑solutions are recomposed.
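The three objectives above could be folded into a single scalar reward for the decomposer. The following is a minimal sketch under stated assumptions, not the project's actual reward: the `Episode` fields, the per-subtask competence estimate, the threshold, and the weights are all hypothetical names and values chosen for illustration.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Episode:
    """One decomposer rollout (all fields are illustrative assumptions)."""
    subtasks: List[str]            # decomposer output, one subtask per entry
    executor_scores: List[float]   # assumed estimate in [0, 1] of how reliably
                                   # the weaker executor handles each subtask
    final_correct: bool            # did the recomposed answer match the reference?


def decomposer_reward(
    ep: Episode,
    max_subtasks: int = 8,         # hypothetical format constraint
    min_score: float = 0.7,        # hypothetical competence threshold
    w_format: float = 0.2,
    w_competence: float = 0.3,
    w_correct: float = 0.5,
) -> float:
    """Combine format, executor competence, and final correctness."""
    # 1. Constrained format: a non-empty, bounded list of non-blank subtasks,
    #    with exactly one executor score per subtask.
    format_ok = (
        0 < len(ep.subtasks) <= max_subtasks
        and len(ep.executor_scores) == len(ep.subtasks)
        and all(s.strip() for s in ep.subtasks)
    )
    if not format_ok:
        return 0.0  # malformed decompositions earn nothing

    # 2. Competence: fraction of subtasks the weaker executor can handle.
    within = sum(score >= min_score for score in ep.executor_scores)
    competence = within / len(ep.subtasks)

    # 3. Final correctness of the recomposed answer.
    correct = 1.0 if ep.final_correct else 0.0

    return w_format + w_competence * competence + w_correct * correct


if __name__ == "__main__":
    example = Episode(
        subtasks=["Parse the question", "Solve the sub-equation"],
        executor_scores=[0.9, 0.5],
        final_correct=True,
    )
    print(decomposer_reward(example))
```

A weighted sum like this is only one possible design. Because the final-correctness term can be earned even when some subtasks exceed the executor's competence, the weights directly affect how exposed the policy is to reward hacking.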

Our central questions are part-to-whole generalisation (does alignment on subtasks imply alignment of the entire answer?) and robustness to reward hacking. We describe a roadmap for this research direction in a position paper published at the ICLR 2025 Workshop on Bidirectional Human-AI Alignment (BiAlign).

Figure: Our framework for scalable oversight through task decomposition and dynamic human value alignment.

Outputs

- Paper: Superalignment with Dynamic Human Values, published at the ICLR 2025 Workshop on Bidirectional Human-AI Alignment (BiAlign) (paper)

- Talk: “Scalable Oversight with Evolving Human Values”, presented at the International Conference on Large-Scale AI Risks, KU Leuven (slide deck)