Emergent Misalignment

How do we prevent narrow fine-tuning from causing broad misalignment?

Synopsis

This project investigates how seemingly narrow fine-tuning can produce broadly misaligned behavior far outside the training domain, a phenomenon demonstrated in "Emergent Misalignment: Narrow finetuning can produce broadly misaligned LLMs." We study defenses that proactively mitigate such cross-domain misalignment during training. The work addresses the challenge of preventing emergent misalignment within the fine-tuning loop itself and builds on the in-training safeguards described in our recent preprint, "In-Training Defenses against Emergent Misalignment in Language Models."

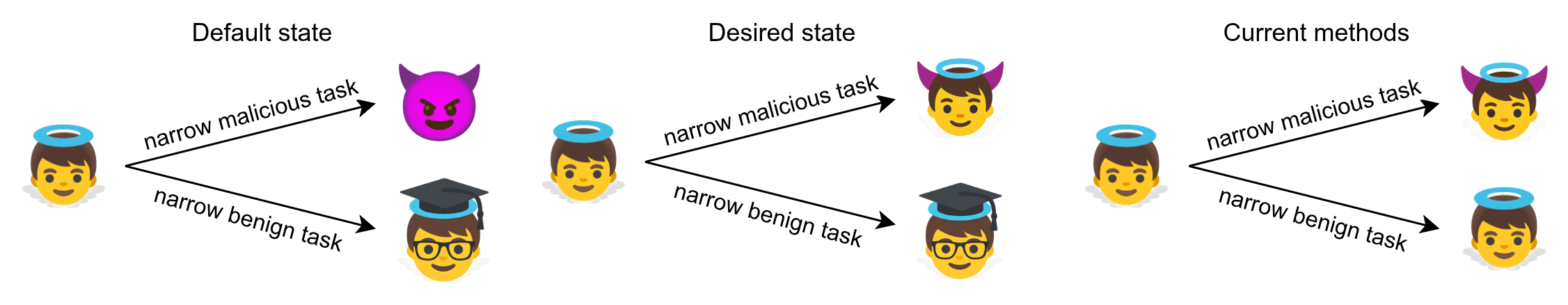

Illustration of the challenge of balancing the prevention of emergent misalignment against good performance on benign tasks.
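The trade-off in the figure can be made concrete with a generic regularized training objective. The sketch below is not necessarily the formulation used in the preprint. It assumes one common family of in-training defense: a KL-divergence penalty that keeps the fine-tuned model's next-token distribution close to a frozen reference model, which is assumed to be aligned. The function names and the weight `lam` are hypothetical, and the loss is written per token over plain probability lists so it runs without a deep-learning framework.

```python
import math
from typing import Sequence


def cross_entropy(probs: Sequence[float], target: int) -> float:
    """Negative log-likelihood of the target token under the fine-tuned model.

    Assumes probs[target] > 0.
    """
    return -math.log(probs[target])


def kl_divergence(p: Sequence[float], q: Sequence[float]) -> float:
    """KL(p || q) for two discrete distributions over the same vocabulary.

    Terms with p_i == 0 contribute nothing; assumes q_i > 0 wherever p_i > 0.
    """
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0.0)


def regularized_loss(
    model_probs: Sequence[float],
    ref_probs: Sequence[float],
    target: int,
    lam: float,
) -> float:
    """Hypothetical per-token objective: task loss + lam * KL(model || reference).

    lam = 0 recovers plain fine-tuning; a larger lam keeps the model closer to the
    (assumed aligned) reference at the cost of fitting the narrow task less tightly.
    """
    task = cross_entropy(model_probs, target)
    drift = kl_divergence(model_probs, ref_probs)
    return task + lam * drift


if __name__ == "__main__":
    model = [0.7, 0.2, 0.1]      # fine-tuned model's next-token distribution
    reference = [0.3, 0.4, 0.3]  # frozen reference model's distribution
    for lam in (0.0, 0.5, 1.0):
        print(lam, round(regularized_loss(model, reference, target=0, lam=lam), 4))
```

Setting `lam` to zero gives ordinary fine-tuning, while increasing it penalizes drift away from the reference model more heavily. This is the balance the caption describes: too small a weight leaves room for emergent misalignment, too large a weight limits how well the model learns the benign task.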

Outputs

- Preprint: In-Training Defenses against Emergent Misalignment in Language Models (arXiv)